Nurse Practitioners' Substitution for Physicians

Thursday, February 18, 2016 at 8:00AM

Richard A. Robbins, MD

Phoenix Pulmonary and Critical Care Research and Education Foundation

Gilbert, AZ USA

Abstract

Background: To deal with a physician shortage and reduce salary costs, nurse practitioners (NPs) are seeing increasing numbers of patients, especially in primary care. In Arizona, SB1473, a bill that would expand the scope of practice of NPs and nurse anesthetists to make them fully independent practitioners, has been introduced in the state legislature. However, whether NPs provide care of equal quality at similar cost is unclear.

Methods: Relevant literature was reviewed, and physician and NP education and care were compared. Study design and metrics, quality of care, and efficiency of care were examined.

Results: NPs and physicians differ in the length of their education. Most clinical studies comparing NP and physician care were poorly designed, often comparing metrics such as patient satisfaction. While increased care provided by NPs has the potential to reduce direct healthcare costs, achieving such reductions depends on the particular context of care. In a minority of clinical situations, NPs appear to incur higher costs than physicians. Cost savings depend on the magnitude of the salary differential between physicians and NPs and may be offset by lower productivity and more extensive testing by NPs.

Conclusions: The findings suggest that in most primary care situations NPs can provide care of as high quality as that of primary care physicians. However, this conclusion should be viewed with caution, given that studies assessing equivalence of care were of poor quality and many had methodological limitations.

Physician Compared to NP Education

Physicians have a longer training process than NPs, a difference rooted largely in history. In 1908 the American Medical Association asked the Carnegie Foundation for the Advancement of Teaching to survey American medical education in order to promote a reformist agenda and hasten the elimination of medical schools that failed to meet minimum standards (1). Abraham Flexner was chosen to prepare the report. Flexner was not a physician, scientist, or medical educator but had operated a for-profit school in Louisville, KY. At that time, there were 155 medical schools in North America that differed greatly in their curricula, methods of assessment, and requirements for admission and graduation.

Flexner visited all 155 schools and generalized about them as follows: "Each day students were subjected to interminable lectures and recitations. After a long morning of dissection or a series of quiz sections, they might sit wearily in the afternoon through three or four or even five lectures delivered in methodical fashion by part-time teachers. Evenings were given over to reading and preparation for recitations. If fortunate enough to gain entrance to a hospital, they observed more than participated."

At the time of Flexner's survey many American medical schools were small trade schools owned by one or more doctors, unaffiliated with a college or university, and run to make a profit. Only 16 out of 155 medical schools in the United States and Canada required applicants to have completed two or more years of university education. Laboratory work and dissection were not necessarily required. Many of the instructors were local doctors teaching part-time, whose own training often left something to be desired. A medical degree was typically awarded after only two years of study.

Flexner used the Johns Hopkins School of Medicine as a model. His 1910 report, known as the Flexner Report, made the following recommendations:

- Reduce the number of medical schools (from 155 to 31);

- Reduce the number of poorly trained physicians;

- Increase the prerequisites to enter medical training;

- Train physicians to practice in a scientific manner and engage medical faculty in research;

- Give medical schools control of clinical instruction in hospitals;

- Strengthen state regulation of medical licensure.

Flexner recommended that admission to a medical school should require, at minimum, a high school diploma and at least two years of college or university study, primarily devoted to basic science. He also argued that medical education should last four years and that its content should follow the recommendations made by the American Medical Association in 1905. Flexner recommended that proprietary medical schools either close or be incorporated into existing universities. Medical schools, he argued, should be part of a larger university because a proper stand-alone medical school would have to charge too much to break even financially.

By and large, medical schools followed Flexner's recommendations. An important factor driving the mergers and closures was that all state medical boards gradually adopted and enforced the Report's recommendations. As a result, the following changes occurred (2):

- Between 1910 and 1935, more than half of all American medical schools merged or closed. This dramatic decline was due in part to implementation of the Report's recommendation that all "proprietary" schools be closed and that medical schools henceforth be connected to universities. Of the 66 surviving MD-granting institutions in 1935, 57 were part of a university;

- Physicians came to receive at least six, and usually eight, years of post-secondary formal instruction, nearly always in a university setting;

- Medical training adhered closely to the scientific method and was grounded in human physiology and biochemistry;

- Medical research adhered to the protocols of scientific research;

- Average physician quality increased significantly.

The Report is now remembered because it succeeded in creating a single model of medical education, characterized by a philosophy that has largely survived to the present day.

Today, physicians usually have a 4-year college degree, 4 years of medical school, and at least 3 years of residency, a total of at least 11 years after high school.

The history of NP education is much more recent. A Master of Science in Nursing (MSN) is the minimum degree required to become an NP (3). This usually requires a Bachelor of Science in Nursing (BSN) and approximately 18 to 24 months of full-time study. Nearly all programs are university-affiliated, most faculty are full-time, and the curricula are standardized.

In total, NPs have a 4-year Bachelor of Science in Nursing followed by 1 1/2 to 2 years of full-time study, or 5 1/2 to 6 years of education after high school.

Differences and Similarities Between Physician and NP Education

Curricula for both physicians and NPs are standardized and scientifically based. Training is considerably longer for physicians (about 11 years compared with 5 1/2 to 6 years). There are also likely differences in clinical exposure. The minimum for an NP is 500 hours of supervised, direct patient care (3), whereas physicians have considerably more clinical time. All physicians are required to complete at least 3 years of postgraduate training after medical school. Duty hours are now limited to 80 hours per week (averaged over 4 weeks), but older physicians can remember when 100+ hour weeks were common. Assuming a conservative 50 hours/week for 48 weeks/year, physicians accumulate at least 7,200 hours over 3 years.
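The year and hour totals above can be laid out as a short worked calculation. This is a sketch under stated assumptions: a 4-year undergraduate degree for both groups, the minimum 3-year residency, and the conservative estimate of 50 hours/week for 48 weeks/year; actual hours vary by program and specialty.

$$
\begin{aligned}
\text{Physician:}\quad & \underbrace{4}_{\text{college}} + \underbrace{4}_{\text{medical school}} + \underbrace{3}_{\text{residency}} = 11 \text{ years} \\
\text{NP:}\quad & \underbrace{4}_{\text{BSN}} + \underbrace{1.5 \text{ to } 2}_{\text{MSN}} = 5.5 \text{ to } 6 \text{ years} \\
\text{Residency hours:}\quad & 50\ \tfrac{\text{h}}{\text{wk}} \times 48\ \tfrac{\text{wk}}{\text{yr}} \times 3\ \text{yr} = 7200\ \text{h} \\
\text{Ratio to NP minimum:}\quad & \frac{7200\ \text{h}}{500\ \text{h}} = 14.4
\end{aligned}
$$

On these assumptions, residency alone provides roughly 14 times the NP minimum of supervised clinical hours, before counting clinical rotations during medical school.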

Hours of Education and Outcomes

The critical question is whether the number of hours NPs spend in education is sufficient. No studies were identified that examined the effect of the number of hours of NP education on outcomes. However, the impact of recent resident duty hour restrictions may be relevant.

Resident Duty Hour Regulations

There are concerns about the reduction in resident duty hours. The idea behind the duty hour restrictions was that well-rested physicians would make fewer mistakes and spend more time studying. These regulations resulted in large part from the infamous Libby Zion case. Zion died in New York at the age of 18 while under the care of a resident and an intern, from serotonin syndrome caused by a drug-drug interaction (4). It was alleged that physician fatigue contributed to her death. In response, New York State limited resident duty hours to 80 per week, and in July 2003 the Accreditation Council for Graduate Medical Education (ACGME) adopted similar regulations for all accredited medical training institutions in the United States. In 2011, the ACGME further restricted first-year residents to duty periods of no more than 16 hours.

The duty hour regulations were adopted despite a lack of studies on their impact, and such studies are only now beginning to emerge. A recent systematic review of 27 studies on duty hour restrictions found no improvement in patient care or resident well-being and a possible negative impact on resident education (5). Similarly, a systematic review of 135 articles in surgery concluded that there was no overall improvement in patient outcomes as a result of resident duty hour restrictions; however, some studies suggested increased complication rates in high-acuity patients (6). Education did not improve, and performance on certification examinations has declined in some specialties (5,6). Surveys revealed a perception of worsened education and patient safety, but also improvements in resident wellness (5,6).

Although the reasons for the lack of improvement (and perhaps decline) in outcomes under the resident duty hour restrictions are unclear, several authors have speculated that reduced continuity of care, with more handoffs between physicians, may be responsible (7). If so, the poorer outcomes may have little to do with medical education or experience; rather, the duty hour restrictions may have fragmented care, and that fragmentation led to poorer care.

Comparison Between Physician and NP Care in Primary Care

A 2005 systematic review by Laurant et al. (8) compared primary care provided by physicians with that provided by nurses. In five studies, the nurse assumed responsibility for first-contact care for patients wanting urgent outpatient visits. Patient health outcomes were similar for nurses and doctors, but patient satisfaction was higher with nurse-led care. Nurses tended to provide longer consultations, give more information to patients, and recall patients more frequently than doctors. The impact on physician workload and direct cost of care was variable. In four studies, the nurse took responsibility for the ongoing management of patients with particular chronic conditions. In general, no appreciable differences were found between doctors and nurses in patient health outcomes, process of care, resource utilization, or cost.

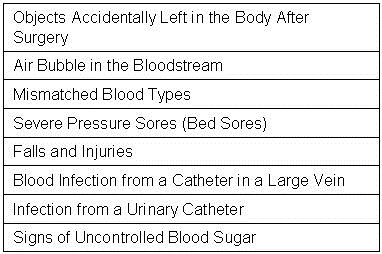

However, Laurant et al. (8) advised caution, since only one study was powered to assess equivalence of care, many studies had methodological limitations, and patient follow-up was generally 12 months or less. In addition, NPs have fewer ambulatory encounters per year than physicians (Figure 1).

Figure 1. Median ambulatory encounters per year (9).

The lower number of visits by NPs means that any cost savings depend on the magnitude of the salary differential between physicians and NPs and may be offset by the lower productivity of NPs.
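The dependence of savings on salary and productivity can be written as a simple break-even condition on salary cost per visit. This is a minimal sketch with illustrative symbols not taken from the cited sources: $S$ is annual salary and $V$ is annual visits for each provider type, and all other costs (overhead, testing, referrals) are ignored.

$$
\begin{aligned}
c_{\text{MD}} &= \frac{S_{\text{MD}}}{V_{\text{MD}}}, \qquad c_{\text{NP}} = \frac{S_{\text{NP}}}{V_{\text{NP}}} \\
c_{\text{NP}} < c_{\text{MD}} &\iff \frac{S_{\text{NP}}}{S_{\text{MD}}} < \frac{V_{\text{NP}}}{V_{\text{MD}}}
\end{aligned}
$$

In words, NP care lowers salary cost per visit only if the NP's salary, as a fraction of the physician's, is smaller than the NP's visit volume as a fraction of the physician's. For a hypothetical NP who sees 70% as many patients as a physician, the NP's salary would need to be below 70% of the physician's; any additional testing or referrals would require an even lower salary ratio.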

More recent reviews and meta-analyses have come to similar conclusions (10-13). However, consistent with the warning of Laurant et al. (8), these studies tend to be underpowered, of poor quality, and often biased.

Despite the overall similarity in results, some studies have reported differences in utilization. Hemani et al. (14) reported greater resource utilization by NPs than by resident and attending physicians in primary care at a Veterans Affairs hospital; the difference was mostly explained by more specialist referrals and more hospitalizations. A recent study by Hughes et al. (15) using 2010-2011 Medicare claims found that NPs and physician assistants (PAs) ordered imaging in 2.8% of episodes of care compared with 1.9% for physicians. The gap was larger for less common diagnosis codes; in other words, the less common the condition, the more often NPs and PAs ordered imaging.
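Because both imaging rates are small, it may help to separate the absolute difference from the relative one. The calculation below uses only the two rates reported by Hughes et al. (15); the rate ratio is derived here and was not necessarily reported in that form by the authors.

$$
\Delta = 2.8\% - 1.9\% = 0.9 \text{ percentage points}, \qquad
\text{rate ratio} = \frac{2.8\%}{1.9\%} \approx 1.47
$$

So although the absolute difference is under one percentage point, NPs and PAs ordered imaging in roughly 47% more episodes of care than physicians.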

NPs Outside of Primary Care

Although studies of patient outcomes in NP-directed care in the outpatient setting were few and many had methodological limitations, even fewer studies have examined NPs outside the primary care clinic. Nevertheless, NPs and PAs have long practiced in both specialty care and the inpatient setting. My personal experience goes back to the 1980s with both NPs and PAs in the outpatient pulmonary and sleep clinics, the inpatient pulmonary service, and the ICU. Although most articles are descriptive, nearly all report a benefit from physician extenders in these and other specialty areas.

More recently, NPs have been hired to fill "hospitalist" roles with scant attention to whether the NP's educational preparation is consistent with the role (16). According to Arizona law, an NP "shall only provide health care services within the NP's scope of practice for which the NP is educationally prepared and for which competency has been established and maintained" (A.A.C. R4-19-508 C). A Department of Veterans Affairs study published in 2001 compared inpatient care by NPs with care by resident physicians (17). Outcomes were similar, although 47% of patients randomized to NP care were actually admitted to housestaff wards, largely at the request of attending physicians and NPs. A recent article also compared NP-delivered critical care with care by resident teams in the ICU (18); mortality and length of stay were similar.

Discussion

NPs have less education and training than physicians. The scientific basis of the curricula appears similar, and there is no evidence that the aptitude of nurses and physicians differs. Therefore, the finding that NPs care for patients much as physicians do most of the time is not surprising, especially for common chronic diseases. However, care may diverge for less common diseases, where NPs' more limited training and experience may play a role.

Physicians have undergone increased training and certification over the past few decades; nurses are now doing the same. The American Association of Colleges of Nursing appears to endorse further education for nurses, encouraging either a PhD or a Doctor of Nursing Practice (DNP) degree (19). However, the trend in medicine has been contradictory: training and certification requirements for physicians have increased while practitioners with less education, training, and experience are substituted for those same physicians. An extension of this trend is that traditional nursing roles are increasingly being filled by medical assistants or nursing assistants (20). The future will likely bring more of the same. NPs will be substituted for physicians; nurses without advanced training will be hired to substitute for NPs and PAs; and medical assistants will increasingly be substituted for nurses, all to reduce personnel costs. Studies will likely be designed to justify these substitutions of less qualified healthcare providers, but they will frequently be underpowered, use rather meaningless metrics, or have other methodological flaws.

Much of this "dumbing down" has been driven by shortages of physicians and/or nurses. The justification has always been that substituting cheaper providers will solve the labor shortage while saving money. However, experience over the past few decades in the US has shown that as education and certification requirements for physicians have increased, their compensation has decreased (21). NPs can likely expect the same.

Some are asking whether physicians should abandon primary care. After years of politicians, bureaucrats, and healthcare administrators promising increased compensation for primary care, most medical students and resident physicians have realized that this is unlikely. Furthermore, the increasing intrusion of regulatory agencies and insurance companies mandating an array of bureaucratic tasks has led to growing dissatisfaction with primary care (22). Consequently, most young physicians are seeking training in subspecialty care. The question is less whether physicians will choose to abandon primary care in the future; without a dramatic change, that decision has already been made.

Arizona SB1473, the bill that would essentially make NPs equivalent to physicians in the eyes of the law, is an expected extension of current trends in medicine. Physicians might object, but supporters of the legislation will likely accuse them of merely protecting their turf. Personally, I am disheartened by these trends, which seem a throwback to the days before the Flexner Report. The poor studies that support these trends will do little more than allow the unscrupulous to line their pockets by substituting practitioners with less education, experience, and training for well-trained, experienced physicians or nurses.

References

1. Flexner A. Medical Education in the United States and Canada: A Report to the Carnegie Foundation for the Advancement of Teaching. New York, NY: The Carnegie Foundation for the Advancement of Teaching; 1910. Available at: http://archive.carnegiefoundation.org/pdfs/elibrary/Carnegie_Flexner_Report.pdf (accessed 2/6/16).

2. Barzansky B, Gevitz N. Beyond Flexner: Medical Education in the Twentieth Century. New York, NY: Greenwood Press; 1992.

3. National Task Force on Quality Nurse Practitioner Education. Criteria for evaluation of nurse practitioner programs. Washington, DC: National Organization of Nurse Practitioner Faculties; 2012. Available at: http://www.aacn.nche.edu/education-resources/evalcriteria2012.pdf (accessed 2/6/16).

4. Lerner BH. A case that shook medicine. Washington Post. November 28, 2006. Available at: http://www.washingtonpost.com/wp-dyn/content/article/2006/11/24/AR2006112400985.html (accessed 2/9/16).

5. Bolster L, Rourke L. The effect of restricting residents' duty hours on patient safety, resident well-being, and resident education: an updated systematic review. J Grad Med Educ. 2015;7(3):349-63.

6. Ahmed N, Devitt KS, Keshet I, et al. A systematic review of the effects of resident duty hour restrictions in surgery: impact on resident wellness, training, and patient outcomes. Ann Surg. 2014;259(6):1041-53.

7. Denson JL, McCarty M, Fang Y, Uppal A, Evans L. Increased mortality rates during resident handoff periods and the effect of ACGME duty hour regulations. Am J Med. 2015;128(9):994-1000.

8. Laurant M, Reeves D, Hermens R, Braspenning J, Grol R, Sibbald B. Substitution of doctors by nurses in primary care. Cochrane Database Syst Rev. 2005 Apr 18;(2):CD001271.

9. Medical Group Management Association. NPP utilization in the future of US healthcare. March 2014. Available at: https://www.mgma.com/Libraries/Assets/Practice%20Resources/NPPsFutureHealthcare-final.pdf (accessed 2/17/16).

10. Tappenden P, Campbell F, Rawdin A, Wong R, Kalita N. The clinical effectiveness and cost-effectiveness of home-based, nurse-led health promotion for older people: a systematic review. Health Technol Assess. 2012;16(20):1-72.

11. Donald F, Kilpatrick K, Reid K, et al. A systematic review of the cost-effectiveness of nurse practitioners and clinical nurse specialists: what is the quality of the evidence? Nurs Res Pract. 2014;2014:896587.

12. Bryant-Lukosius D, Carter N, Reid K, et al. The clinical effectiveness and cost-effectiveness of clinical nurse specialist-led hospital to home transitional care: a systematic review. J Eval Clin Pract. 2015;21(5):763-81.

13. Kilpatrick K, Reid K, Carter N, et al. A systematic review of the cost-effectiveness of clinical nurse specialists and nurse practitioners in inpatient roles. Nurs Leadersh (Tor Ont). 2015;28(3):56-76.

14. Hemani A, Rastegar DA, Hill C, al-Ibrahim MS. A comparison of resource utilization in nurse practitioners and physicians. Eff Clin Pract. 1999;2(6):258-65.

15. Hughes DR, Jiang M, Duszak R Jr. A comparison of diagnostic imaging ordering patterns between advanced practice clinicians and primary care physicians following office-based evaluation and management visits. JAMA Intern Med. 2015;175(1):101-7.

16. Arizona Board of Nursing. Registered nurse practitioner (RNP) practicing in an acute care setting. Available at: https://www.pncb.org/ptistore/resource/content/faculty/AZ_SBN_RNP.pdf (accessed 2/12/16).

17. Pioro MH, Landefeld CS, Brennan PF, Daly B, Fortinsky RH, Kim U, Rosenthal GE. Outcomes-based trial of an inpatient nurse practitioner service for general medical patients. J Eval Clin Pract. 2001;7(1):21-33.

18. Landsperger JS, Semler MW, Wang L, Byrne DW, Wheeler AP. Outcomes of nurse practitioner-delivered critical care: a prospective cohort study. Chest. 2015;148(6):1530-5.

19. American Association of Colleges of Nursing. DNP fact sheet. June 2015. Available at: http://www.aacn.nche.edu/media-relations/fact-sheets/dnp (accessed 2/13/16).

20. Bureau of Labor Statistics. Occupational outlook handbook: medical assistants. December 17, 2015. Available at: http://www.bls.gov/ooh/healthcare/medical-assistants.htm (accessed 2/13/16).

21. Robbins RA. National health expenditures: the past, present, future and solutions. Southwest J Pulm Crit Care. 2015;11(4):176-85.

22. Peckham C. Physician burnout: it just keeps getting worse. Medscape. January 26, 2015. Available at: http://www.medscape.com/viewarticle/838437_3 (accessed 2/13/16).

Cite as: Robbins RA. Nurse practitioners' substitution for physicians. Southwest J Pulm Crit Care. 2016;12(2):64-71. doi: http://dx.doi.org/10.13175/swjpcc019-16