The Potential Dangers of Quality Assurance, Physician Credentialing and Solutions for Their Improvement

Monday, October 17, 2022 at 8:00AM

Richard A. Robbins, MD

Phoenix Pulmonary and Critical Care Research and Education Foundation

Gilbert, AZ USA

Abstract

The Institute of Medicine defines health care quality as “the degree to which health care services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge”. However, defining which outcomes are desired and what constitutes current professional knowledge can be controversial. This review article examines the effectiveness of quality assurance and points out some of its dangers to physicians. Since deficient quality assurance can affect credentialing, solutions for the problem are offered, including an independent medical staff and election rather than appointment of the chief of staff. Solutions to expedite and ensure accuracy in credentialing are also offered, including use of the Interstate Medical Licensure Compact (IMLC). These solutions should lead to improved and fairer quality assurance, reduced administrative expenses, decreased fraud, and modernization of physician licensing and credentialing.

Introduction

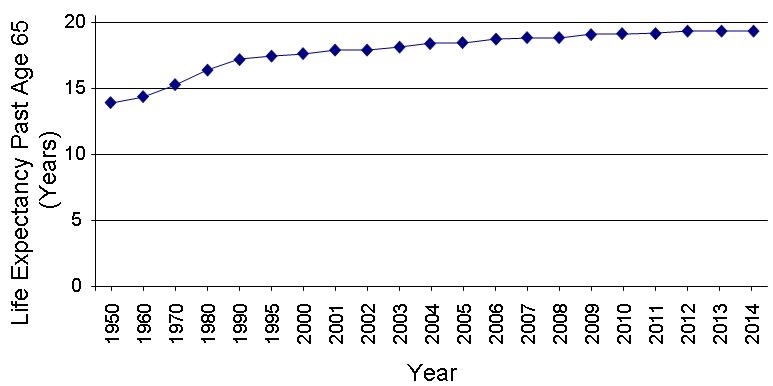

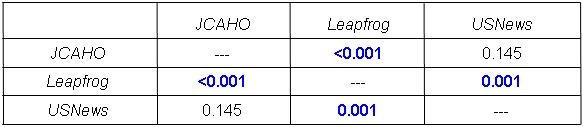

In 2013 the Southwest Journal of Pulmonary and Critical Care published a review of the history of the quality movement and quality improvement programs (quality assurance, QA) by major healthcare regulatory organizations including the Joint Commission, Institute for Healthcare Improvement, Department of Veterans Affairs, Institute of Medicine, and the Department of Health and Human Services (1). The review concluded that their measures were flawed. Although patient-centered outcomes were initially examined, these were replaced with surrogate markers. Many of the surrogate markers were weakly or nonevidence-based interventions. Furthermore, the surrogate markers were often “bundled”, some evidence-based and some not. These guidelines, surrogate markers and bundles were rarely subjected to beta testing. When carefully scrutinized, the guidelines rarely correlated with improved patient-centered outcomes. Based on this lack of improvement in outcomes, the article concluded that the quality movement had not improved healthcare.

Nearly all quality assurance articles state that playing the “blame game”, in which a person or group of people is blamed for a bad outcome, is counterproductive. However, most QA programs do exactly that (2). Physicians often bear the brunt of the blame. Witness the National Practitioner Data Bank, which is little more than a physician blacklist (3). Most QA reviews point out the importance of obtaining physician buy-in to the process (2). Yet most QA programs are run by nonphysicians and overseen by hospital administrators. Not surprisingly, such a process has been used as a means of controlling physicians and squelching any dissent. This manuscript was undertaken as a follow-up to the earlier review and to point out the potential dangers of quality assurance. It seems to reinforce the principle that “not everything that counts can be counted, and not everything that can be counted counts” (4).

New Data on Quality Assurance Leading to Improvements in Patient Outcomes

There are few manuscripts that show definitive improvement in patient outcomes and many continue to use mostly meaningless metrics. However, a recent project by the Mayo Clinic is a notable exception (5). Faced with a six-quarter rise in the observed/expected inpatient mortality ratio, physicians prospectively studied a multicomponent intervention. The project leadership team attempted to implement standardized system-wide improvements while allowing individual hospitals to simultaneously pursue site-specific practice redesign opportunities. System-wide mortality was reduced from 1.78 to 1.53 (per 100 admissions; p = .01). Although the actual plan implemented was somewhat vague, it is clear that the project was physician led and was not associated with affixing blame to any physician or group of physicians. However, it may be that the program did little more than decrease the number of admissions that were at high risk for death which can lead to reduced standardized mortality (5).

Dangers of Quality Assurance

Young physicians need to be aware of the dangers of quality assurance. Although seminal publications such as “To Err Is Human” (2) often point out that efforts to fix blame rather than analyze errors are counterproductive, experience indicates that fixing blame is often what is done. Medicine is rarely practiced by a sole practitioner, and should patient care result in a bad outcome, the physician least valued by administration is probably the one who will be blamed. I would advise young physicians to be wary of admitting any wrongdoing and to seek legal counsel when appropriate. Chiefs of staff (COS), who used to be elected from the active medical staff, are now appointed and serve their administrative masters rather than the medical staff they represent in name only. Furthermore, their lack of understanding of statistics, and in some cases medicine, can make their actions dangerous. In many instances they are not interested in reasoning or explanation but in action to make the “numbers right”. Any explanation is often viewed as a mere excuse for poor performance.

Below are some examples of quality assurance being used for physician control rather than improving care. These dangers are not mentioned in reviews of QA. I personally have witnessed each and remain concerned that we perpetuate the notion that quality assurance is a positive thing that “weeds out” bad physicians. As physicians are increasingly employed by hospitals, this may become more of a problem.

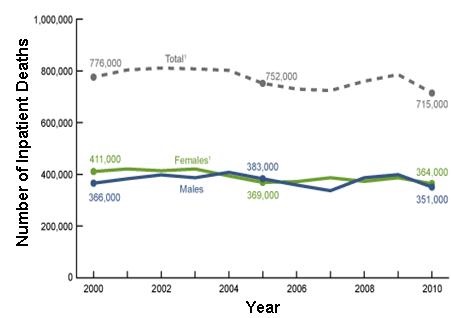

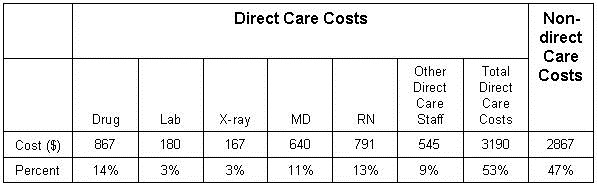

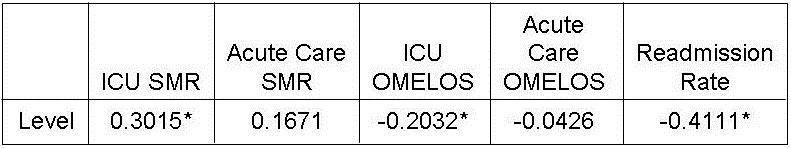

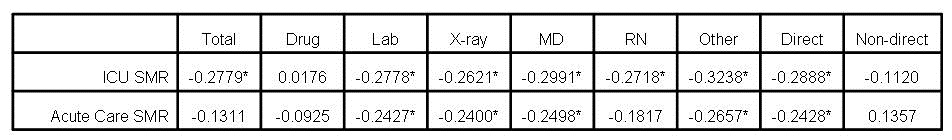

Mortality

Mortality rates, especially in small-population areas of the hospital, are particularly subject to manipulation. For example, a small ICU might admit some patients more appropriately cared for in a hospice. If this care results in 1 or 2 excess deaths in a month because of these inappropriate admissions, the standardized mortality for a small ICU can easily rise above 1.2 (number of deaths/expected deaths), which is usually used as a cutoff for excess mortality (6,7). If 1 or 2 doctors are responsible for these patients, a superficial review might conclude that poor care resulted in the excess deaths. At the Phoenix VA we were faced with a high mortality in the ICU. In those days the ICU was used as a hospice because understaffing of some medicine floors made quality care for dying patients difficult. By denying admission of those patients to the ICU, we were able to reduce ICU mortality to acceptable standards (Robbins RA, unpublished observations).
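The sensitivity of a small unit's ratio to one or two deaths can be illustrated with a short calculation. This is only a sketch: the expected-death counts (4 for a small ICU, 40 for a larger unit) and the function names are hypothetical values chosen for illustration; only the ratio definition and the 1.2 cutoff come from the text above.

```python
EXCESS_MORTALITY_CUTOFF = 1.2  # cutoff for excess mortality cited in the text


def standardized_mortality_ratio(observed_deaths: int, expected_deaths: float) -> float:
    """Return observed deaths divided by expected deaths."""
    if expected_deaths <= 0:
        raise ValueError("expected_deaths must be positive")
    return observed_deaths / expected_deaths


def exceeds_cutoff(observed_deaths: int, expected_deaths: float) -> bool:
    """True when the ratio rises above the excess-mortality cutoff."""
    return standardized_mortality_ratio(observed_deaths, expected_deaths) > EXCESS_MORTALITY_CUTOFF


if __name__ == "__main__":
    # Hypothetical units: expected deaths per month are assumed, not taken from the article.
    for unit, expected in (("small ICU", 4.0), ("large unit", 40.0)):
        for excess in (0, 1, 2):
            observed = int(expected) + excess
            ratio = standardized_mortality_ratio(observed, expected)
            print(f"{unit}: {excess} excess deaths, ratio {ratio:.2f}, "
                  f"flagged: {exceeds_cutoff(observed, expected)}")
```

Under these assumed numbers, a single excess death moves the small ICU from 1.00 to 1.25 and over the cutoff, whereas the same one or two deaths leave the larger unit well below it.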

Similar principles can be applied to surgical or procedural mortality. Administrators have been known to scrutinize surgical mortality, or to focus on complications that may or may not have arisen from the operation, as excuses for replacing or restricting physicians. My personal examples include a review of the outcomes of a thoracic surgeon who operated at multiple hospitals. Because one hospital wanted more operations done there, it initiated a review of surgical mortality with the idea that the surgeon could be replaced with a physician willing to do the bulk of his or her operations at the hospital requesting the review.

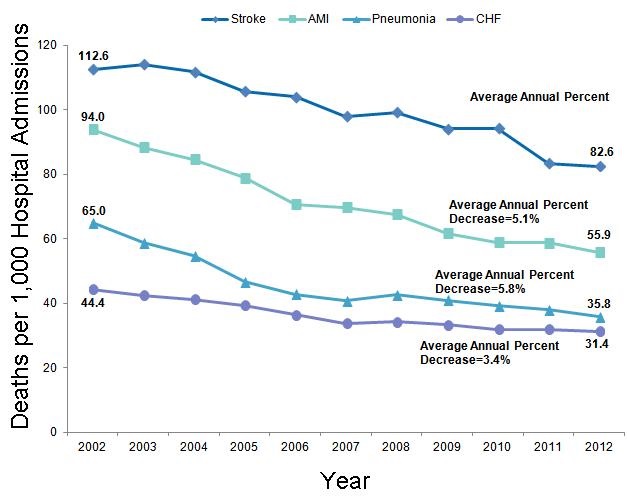

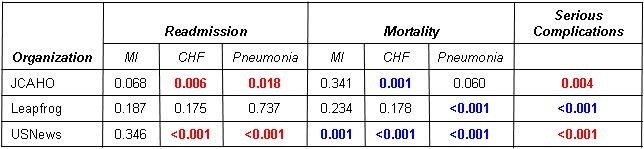

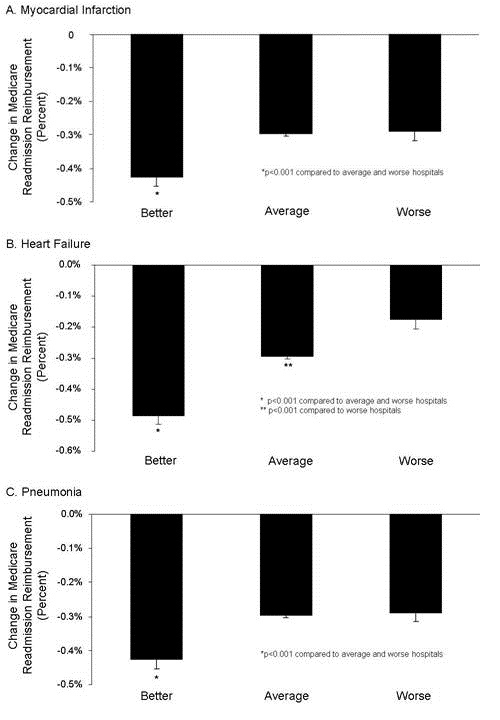

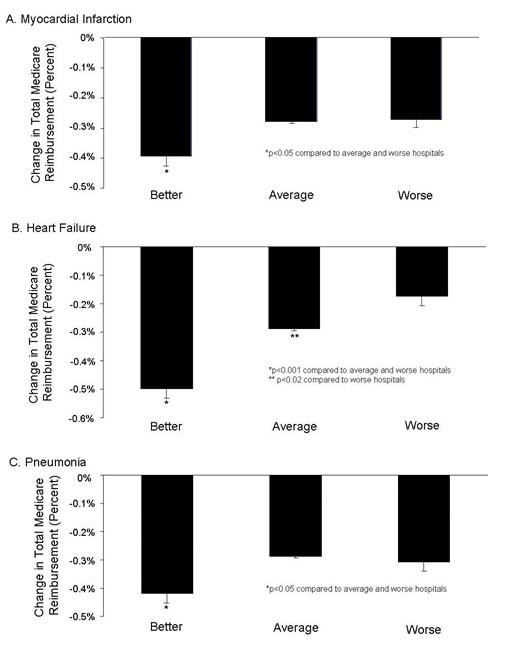

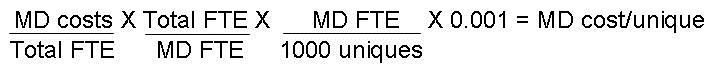

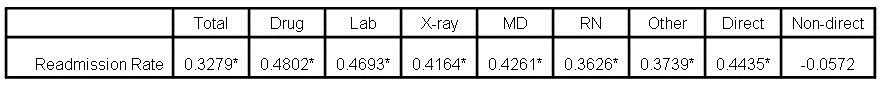

Hospital Readmissions

Reduction in hospital readmissions has been touted not only as a quality measure but also as a means of reducing healthcare costs. The Affordable Care Act (ACA) established the Hospital Readmissions Reduction Program (HRRP) in 2012. Under this program, hospitals are financially penalized if they have higher than expected risk-standardized 30-day readmission rates for acute myocardial infarction, heart failure, and pneumonia. The HRRP has garnered significant attention. However, readmissions are sometimes quite appropriate. Under the HRRP, readmissions have decreased, but at the cost of higher mortality, at least for some common conditions including pneumonia, myocardial infarction and heart failure (8,9).

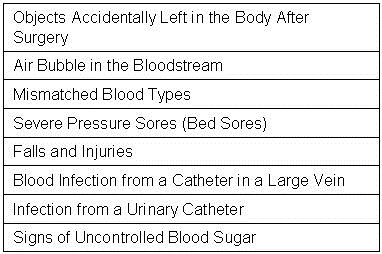

Hospital-Acquired Infections

It has long been known that hospital-acquired infections are the final cause of death in many severely ill patients (10). Patients cared for several days to weeks in the ICU often develop line sepsis, ventilator-associated pneumonia, or catheter-associated urinary tract infections. How to prevent these infections is unclear (1). Nevertheless, CMS initiated the Hospital-Acquired Condition Reduction Program. With their usual definiteness, CMS announced that their program had saved 8000 lives and reduced expenditures by 2.9 billion dollars (11). However, these claims are based on extrapolated data, and there appear to be no data showing that inpatient hospital deaths declined or that expenditures decreased. Some explanation, illustrated by the following example, is probably appropriate. Suppose a patient with advanced lung cancer is admitted to the ICU and intubated while awaiting chemo or immunotherapy. However, the therapy is ineffective and after 7 days the patient succumbs to an apparent ventilator-associated pneumonia (VAP). Under the CMS extrapolation, the patient would not have died had they not developed pneumonia, which is clearly not true. This and similar extrapolations make the CMS data unreliable.

At the Phoenix VA ICU, we had a high incidence of VAP almost certainly because we were very aggressive in diagnosis. We would do bronchoscopy with bronchoalveolar lavage and quantitative cultures to diagnose ventilator-associated pneumonia (12). However, rather than our efforts being acknowledged we were threatened because our high incidence of VAP combined with our high mortality only illustrated that we were “bad” physicians according to the then COS, Raymond Chung. He brought in an outside consultant who advised us to do tight control of glucose which would have further increased our mortality (13). We resolved the problems by decreasing the use of the ICU as a hospice as previously mentioned and by eliminating the diagnosis of VAP in the ICU. We simply quit doing bronchoscopy with BAL for diagnosis of VAP and forbade our students, residents and fellows from mentioning VAP in their notes. Our VAP rate went to zero.

Patient Wait Times

The falsification of wait times by the Department of Veterans Affairs has been well documented (14). What is less well known is that over 70% of Veterans Affairs medical centers participated in this fraud (15). What is not discussed is that VA administrators were well aware that they were falling short and assigning more patients to providers than their guidelines direct. Furthermore, when the scandal became apparent, they tried to blame long wait times on “lazy doctors” (16). At the epicenter of the wait scandal, the COS at the Phoenix VA, Raymond Chung, had been aware of long wait times but kept physicians ignorant of the extent of the problem. Furthermore, in the pulmonary and critical care section the percentage of our patients waiting over 14 days was very small (<1%) and most of those waits were due to patient requests (Robbins RA, unpublished observations). However, Dr. Chung wanted to hold meetings with me to discuss the poor performance of the pulmonary and critical care section until we started publishing our results by email and comparing them to other sections.

Challenging the Hospital Administration

The sad tale of how the firing of the nighttime janitor led to a maggot infestation at the Kansas City VA is well documented (17). What is not as well documented is what happened in the aftermath. The hospital director who fired the janitor, Hugh Doran, had already resigned from the VA because of a scandal involving him soliciting prostitution on “John TV”. However, his colleagues apparently took exception to Dr. Steve Klotz publishing his investigation of the maggot infestation in a scientific journal (18). Dr. Klotz’s Merit Review, which he had held for over 20 years, was not renewed, and he left the VA to head the HIV clinic at the University of Arizona, eventually becoming head of the infectious disease section.

Solutions

Quality assurance should be the function of an independent medical staff. Businessmen are not trained in medicine, have no practical medical experience and do not have the statistical background to determine sources of problems or the best remedies to care-related problems. The medical staff needs to be independent. A medical staff hired by the hospital most likely serves the financial concerns of the hospital administration.

Chief of Staff

The COS should be involved in the quality assurance process but only if they clearly serve the patient and the medical staff. The COS is now either appointed or approved by the hospital administration. They are no longer the doctors’ representative to the hospital administration but rather the hospital administration’s representative to the doctors. The concept that the COS can work in a “kumbaya” relationship with hospital administrators is a naive remnant of a bygone era. Although a good working relationship may exist in some healthcare organizations, the increasing number of suits by physicians suggests it is no longer a given that the doctors and the hospital administration work together. Furthermore, as illustrated by the examples above, the administration cannot be trusted to be fair to the individual physician.

Credentialing

Similar to QA, credentialing should be a function of the medical staff. Credentialing is the process by which the education, training, licensure, registrations and certifications, sanctions, as well as work history, including malpractice litigation, are documented and approved by the medical facility where the physician intends to provide care. In the credentialing process, many of the same documents required for state licensure are reverified; recredentialing must be periodically performed, up to every 3 years, with elements subject to change reverified. The COVID-19 pandemic has shown that our current state licensure and individual hospital credentialing procedures are unwieldy and painfully slow (19). During the pandemic various states were in desperate need of additional physicians to care for critically ill patients. Because physician licensure is by state, states had to waive this requirement to hire physicians licensed in other states. In addition, hospitals had to implement their disaster plans to streamline credentialing requirements to bring on additional physicians whether from in-state or out-of-state.

By allowing physicians licensed in one state to practice in another, and using disaster credentialing standards, NYC Health + Hospitals was able to staff up to meet urgent needs during the pandemic (20). To strengthen the ability of the US to respond to future crises, better allocate medical personnel to areas of need and also reduce administrative costs, permanent ways of enabling physicians to practice in any state are needed, such as a national physician license. The requirements for obtaining a state license are essentially the same (i.e., graduation from medical school and passage of a federal licensure test) across the country (19). Also, although there are regional differences in medical care, they are not by design. The Department of Veterans Affairs already accepts any valid state license to practice in any of its facilities (federal laws supersede state laws) and the system works well. Nonetheless, state licensure has deep roots in the Tenth Amendment of the Constitution, provides revenue to state governments and medical boards, and at times is used to prevent competition from related health professions (19).

Given that a national license is not imminent, Mullangi et al. (20) have proposed a good intermediate step: build on the Interstate Medical Licensure Compact (IMLC). At present, more than 25 states have joined the compact and agreed to the same licensure requirements and to accept each other’s review of the applicants (21). If the federal government were to require all states to join the compact, a licensed physician could expediently obtain a new state license, as opposed to each state medical board verifying credentials as well as other requirements.

However, even if the US had had a national physician license at the time that COVID-19 hit, hospitals would still have had to invoke their disaster plans to waive usual credentialing processes and immediately employ the physicians needed to staff for the pandemic. A key obstacle with credentialing is the requirement that each entity (hospitals and insurance plans) independently verifies credentials. In practical terms, no matter how many hospitals a physician has worked in, no matter how many states in which he or she holds a medical license in good standing, no matter how many insurance plans have previously enrolled the physician, each hospital or insurance plan must independently verify the credentials. It is this redundancy that causes the long delays between when a physician accepts a position and when he or she can begin work and/or bill for services. One partial remedy is for health care networks to share credentialing elements among their member facilities.

A more robust method for reducing inefficiencies and increasing accountability in medical credentialing is to have a single national source for physician credentialing. At present, there are limited efforts in this direction. There are already a number of repositories to verify medical credentials in full or in part, including, to name just a few, the Federation of State Medical Boards, the Drug Enforcement Administration, the American Medical Association, the National Practitioner Data Bank and many credential verification organizations that will check credentials for a price.

Implementing these proposals would not necessarily require a government subsidy. Individual physicians could pay to register in exchange for not having to submit their materials and medical education and practice histories multiple times. Hospitals and insurers could pay to access the system. Having a single national repository would not only ease staffing burdens during a pandemic or normal operations but has also been estimated to save more than $1 billion annually. Potentially, physicians would not even need to fill out forms with their professional information to be verified. Once their identity was confirmed, information would simply be downloaded onto a common form from the database.

Conclusions

There are numerous dangers to physicians in the QA process because the process is controlled by unqualified administrators unfamiliar with medical practice. Making QA a function of an independent medical staff rather than the hospital administration could potentially resolve many of these dangers. The COVID-19 pandemic has shown that the current US system of state licensure and hospital-based credentialing precludes the rapid hiring and credentialing of physicians. These experiences suggest solutions to more rapidly and flexibly deploy our physician workforce, decrease delays and administrative expenses, reduce fraud, and modernize physician licensing and credentialing.

References

1. Robbins RA. The unfulfilled promise of the quality movement. Southwest J Pulm Crit Care. 2014;8(1):50-63.

2. Institute of Medicine (US) Committee on Quality of Health Care in America. To Err is Human: Building a Safer Health System. Kohn LT, Corrigan JM, Donaldson MS, editors. Washington (DC): National Academies Press (US); 2000.

3. Health Resources and Services Administration (HRSA), HHS. National Practitioner Data Bank for Adverse Information on Physicians and Other Health Care Practitioners: reporting on adverse and negative actions. Final rule. Fed Regist. 2010 Jan 28;75(18):4655-82.

4. Mason D. Not Everything That Counts Can be Counted. Nov 12, 2013. Available at: https://medium.com/@visualizechange/not-everything-that-counts-can-be-counted-8cdeb6deafe8 (accessed 10/16/22).

5. Mueller JT, Thiemann KMB, Lessow C, Murad MH, Wang Z, Santrach P, Poe J. The Mayo Clinic Hospital Mortality Reduction Project: Description and Results. J Healthc Manag. 2020 Mar-Apr;65(2):122-132.

6. Pollock BD, Herrin J, Neville MR, Dowdy SC, Moreno Franco P, Shah ND, Ting HH. Association of Do-Not-Resuscitate Patient Case Mix With Publicly Reported Risk-Standardized Hospital Mortality and Readmission Rates. JAMA Netw Open. 2020 Jul 1;3(7):e2010383.

7. Nicholls A. The Standardised Mortality Ratio and How to Calculate It. August 26, 2020. Available at: https://s4be.cochrane.org/blog/2020/08/26/the-standardised-mortality-ratio-and-how-to-calculate-it/ (accessed 9/15/22).

8. Robbins RA, Gerkin RD. Comparisons between Medicare mortality, morbidity, readmission and complications. Southwest J Pulm Crit Care. 2013;6(6):278-86.

9. Gupta A, Fonarow GC. The Hospital Readmissions Reduction Program-learning from failure of a healthcare policy. Eur J Heart Fail. 2018 Aug;20(8):1169-1174.

10. Feingold DS. Hospital-acquired infections. N Engl J Med. 1970 Dec 17;283(25):1384-91.

11. CMS. Declines in Hospital-Acquired Conditions Save 8,000 Lives and $2.9 Billion in Costs. Jun 05, 2018. Available at: https://www.cms.gov/newsroom/press-releases/declines-hospital-acquired-conditions-save-8000-lives-and-29-billion-costs (accessed 9/24/22).

12. Horonenko G, Hoyt JC, Robbins RA, Singarajah CU, Umar A, Pattengill J, Hayden JM. Soluble triggering receptor expressed on myeloid cell-1 is increased in patients with ventilator-associated pneumonia: a preliminary report. Chest. 2007 Jul;132(1):58-63.

13. NICE-SUGAR Study Investigators, Finfer S, Chittock DR, Su SY, Blair D, Foster D, Dhingra V, Bellomo R, Cook D, Dodek P, Henderson WR, Hébert PC, Heritier S, Heyland DK, McArthur C, McDonald E, Mitchell I, Myburgh JA, Norton R, Potter J, Robinson BG, Ronco JJ. Intensive versus conventional glucose control in critically ill patients. N Engl J Med. 2009 Mar 26;360(13):1283-97.

14. Oppel RA Jr, Shear MD. Severe Report Finds V.A. Hid Waiting Lists at Hospitals. NY Times. May 28, 2014. Available at: https://www.nytimes.com/2014/05/29/us/va-report-confirms-improper-waiting-lists-at-phoenix-center.html (accessed 9/30/22).

15. Office of VA Inspector General. Review of alleged patient deaths, patient wait times, and scheduling practices at the Phoenix VA health care system. Available at: http://www.va.gov/oig/pubs/VAOIG-14-02603-267.pdf (accessed 9/30/22).

16. Robbins RA. Patient deaths blamed on long waits at the Phoenix VA. Southwest J Pulm Crit Care. 2014;8(4):227-8.

17. Robbins RA. Profiles in medical courage: of mice, maggots and Steve Klotz. Southwest J Pulm Crit Care. 2012;4:71-7. Available at: https://www.swjpcc.com/general-medicine/2012/3/30/profiles-in-medical-courage-of-mice-maggots-and-steve-klotz.html (accessed 9/26/22).

18. Beckendorf R, Klotz SA, Hinkle N, Bartholomew W. Nasal myiasis in an intensive care unit linked to hospital-wide mouse infestation. Arch Intern Med. 2002 Mar 25;162(6):638-40.

19. Bell DL, Katz MH. Modernize Medical Licensing, and Credentialing, Too-Lessons From the COVID-19 Pandemic. JAMA Intern Med. 2021;181(3):312-315.

20. Mullangi S, Agrawal M, Schulman K. The COVID-19 Pandemic-An Opportune Time to Update Medical Licensing. JAMA Intern Med. 2021 Mar 1;181(3):307-308.

21. Steinbrook R. Interstate medical licensure: major reform of licensing to encourage medical practice in multiple states. JAMA. 2014;312(7):695-696.

Cite as: Robbins RA. The Potential Dangers of Quality Assurance, Physician Credentialing and Solutions for Their Improvement. Southwest J Pulm Crit Care Sleep. 2022;25(4):52-58. doi: https://doi.org/10.13175/swjpccs044-22